Reliability Assurance

Memfit AI implements a multi-layered defense-in-depth strategy to ensure reliable operation in unpredictable environments. Unlike fragile scripts that crash on error, the system is designed to fail gracefully, recover autonomously, and learn from mistakes. This document provides a comprehensive technical exposition of the reliability mechanisms implemented within the Memfit AI architecture.

Theoretical Foundation

The reliability framework of Memfit AI is grounded in several established principles from distributed systems, cognitive science, and control theory:

- Graceful Degradation: The system continues to operate at reduced capability when partial failures occur, rather than experiencing catastrophic failure.

- Adaptive Control: Real-time feedback loops enable the system to adjust its behavior based on observed outcomes.

- Meta-Cognition: The agent can reason about its own reasoning process, enabling self-correction.

- Cumulative Learning: Each failure contributes to a growing knowledge base that improves future reliability.

1. Adaptive Self-Reflection

Self-reflection is the system's "Immune System." It is not a separate post-process but an integral part of the execution loop, deeply embedded within the reactloops architecture.

1.1 Reflection Level Taxonomy

The system implements a graduated hierarchy of reflection intensities, each with distinct computational costs and diagnostic capabilities:

| Level | Name | Trigger Condition | AI Involvement | Memory Search Depth |

|---|---|---|---|---|

| 0 | None | Reflection disabled | None | None |

| 1 | Minimal | Default for simple actions | None (logging only) | None |

| 2 | Standard | SPIN detection, iteration > 5 | Yes | 2 KB |

| 3 | Deep | Complex action analysis | Yes | 5 KB |

| 4 | Critical | Action execution failure | Yes | 10 KB |

The reflection level is determined dynamically based on multiple factors:

// ReflectionLevel hierarchy (from action_reflection.go)

type ReflectionLevel int

const (

ReflectionLevel_None ReflectionLevel = iota

ReflectionLevel_Minimal // Record execution result only

ReflectionLevel_Standard // Evaluate basic impact

ReflectionLevel_Deep // Detailed environmental analysis

ReflectionLevel_Critical // Root cause analysis for failures

)

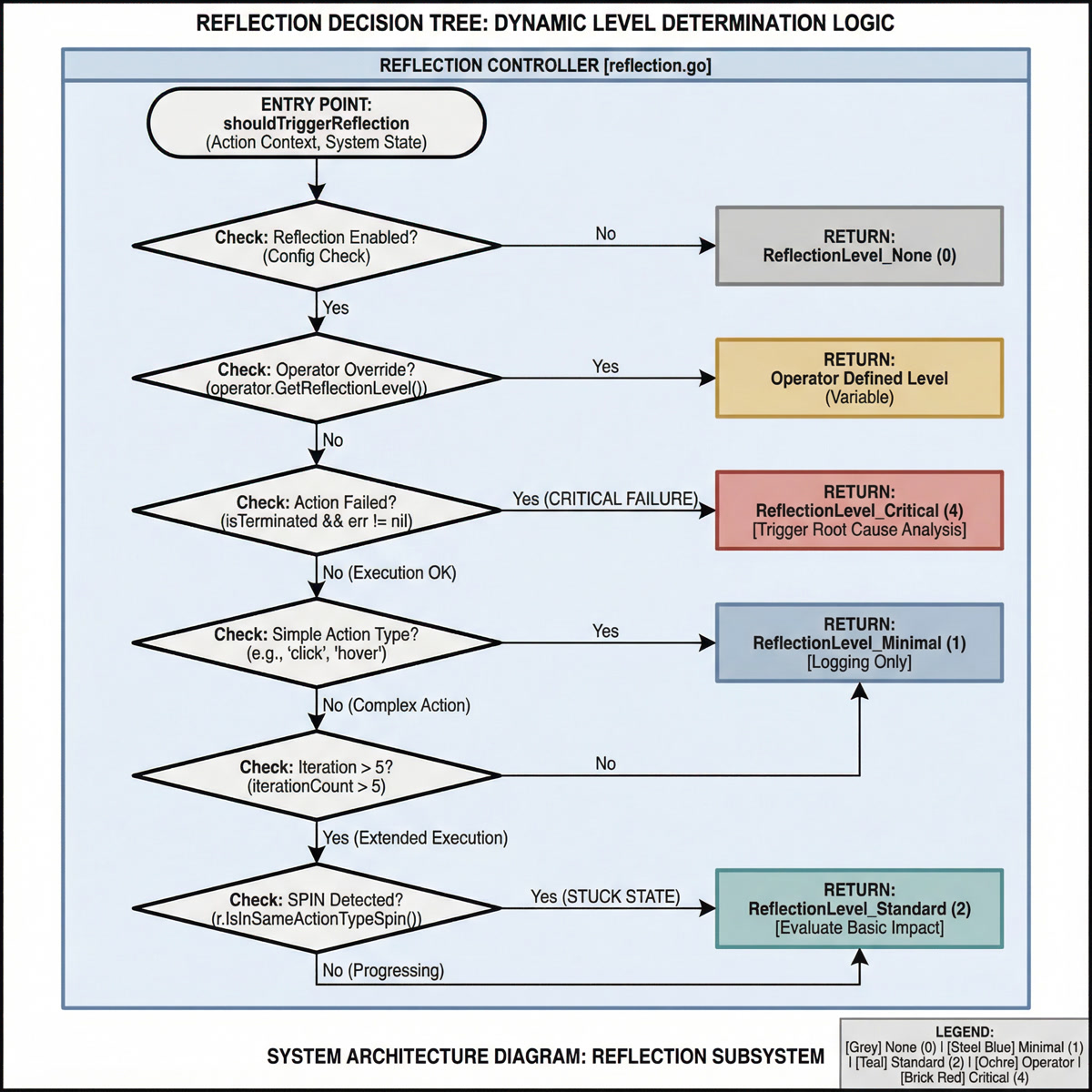

1.2 Trigger Mechanisms

The system monitors execution in real-time and triggers reflection under specific conditions through a sophisticated decision tree:

1.2.1 Primary Trigger: Execution Failure

When an action terminates with an error, the system immediately escalates to Critical reflection level:

// From reflection.go - shouldTriggerReflection

isTerminated, err := operator.IsTerminated()

if isTerminated && err != nil {

// Failure scenario: trigger critical reflection

log.Infof("action[%s] failed, trigger critical reflection", action.ActionType)

return ReflectionLevel_Critical

}

1.2.2 Secondary Trigger: Iteration Threshold

After 5 iterations without task completion, the system enters a heightened monitoring state:

// High iteration count: interval reflection strategy (start after 5 steps)

if iterationCount > 5 {

// Priority check for SPIN condition

if r.IsInSameActionTypeSpin() {

log.Infof("SPIN detected at iteration[%d], trigger immediate reflection", iterationCount)

return ReflectionLevel_Standard

}

// Non-reflection turn: minimal reflection only

return ReflectionLevel_Minimal

}

1.2.3 Tertiary Trigger: Operator-Defined Escalation

Individual action handlers can request specific reflection levels through the operator interface:

operatorLevel := operator.GetReflectionLevel()

if operatorLevel != ReflectionLevel_None {

log.Infof("use action-defined reflection level: %s", operatorLevel.String())

return operatorLevel

}

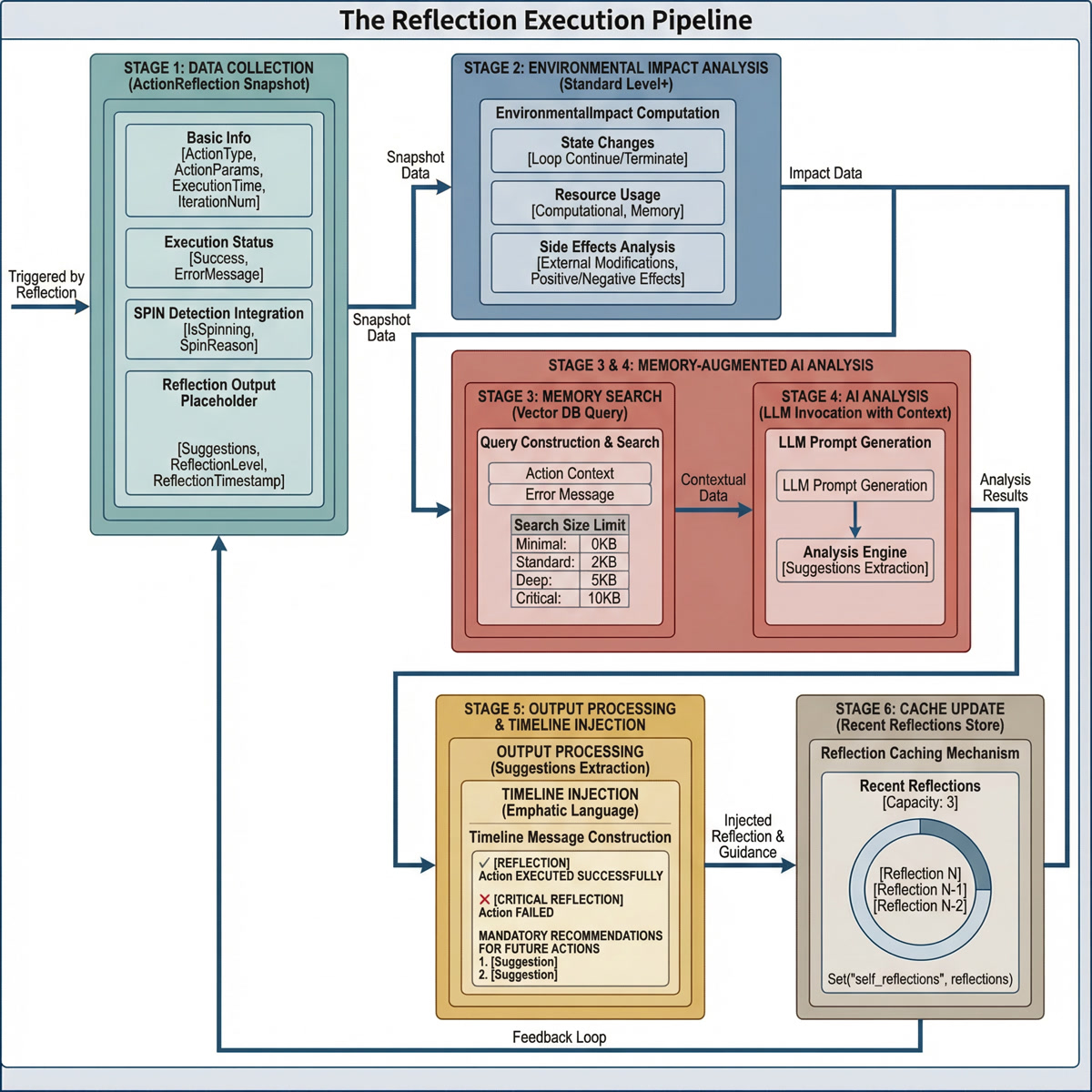

1.3 The Reflection Execution Pipeline

When reflection is triggered, the system executes a multi-stage pipeline:

Stage 1: Data Collection

The system captures a comprehensive snapshot of the current execution state:

// ActionReflection structure (from action_reflection.go)

type ActionReflection struct {

// Basic Information

ActionType string `json:"action_type"`

ActionParams map[string]interface{} `json:"action_params"`

ExecutionTime time.Duration `json:"execution_time"`

IterationNum int `json:"iteration_num"`

Success bool `json:"success"`

ErrorMessage string `json:"error_message,omitempty"`

// Environmental Impact Analysis

EnvironmentalImpact *EnvironmentalImpact `json:"environmental_impact,omitempty"`

// Reflection Output

Suggestions []string `json:"suggestions,omitempty"`

ReflectionLevel string `json:"reflection_level"`

ReflectionTimestamp time.Time `json:"reflection_timestamp"`

// SPIN Detection Integration

IsSpinning bool `json:"is_spinning,omitempty"`

SpinReason string `json:"spin_reason,omitempty"`

}

Stage 2: Environmental Impact Analysis

For Standard level and above, the system analyzes the environmental impact of the executed action:

// EnvironmentalImpact structure (from action_reflection.go)

type EnvironmentalImpact struct {

StateChanges []string `json:"state_changes"`

ResourceUsage map[string]interface{} `json:"resource_usage"`

SideEffects []string `json:"side_effects"`

PositiveEffects []string `json:"positive_effects"`

NegativeEffects []string `json:"negative_effects"`

}

This analysis captures:

- State Changes: Whether the action continued or terminated the loop

- Resource Usage: Computational resources consumed

- Side Effects: External system modifications

- Impact Classification: Positive and negative effects on task progress

Stage 3: Memory-Augmented AI Analysis

For Standard level and above, the system invokes AI-assisted analysis with historical context:

// Memory search depth varies by reflection level (from reflection_memory.go)

switch level {

case ReflectionLevel_Minimal:

return "" // No memory search for minimal level

case ReflectionLevel_Standard:

searchSizeLimit = 2 * 1024 // 2KB

case ReflectionLevel_Deep:

searchSizeLimit = 5 * 1024 // 5KB

case ReflectionLevel_Critical:

searchSizeLimit = 10 * 1024 // 10KB - Critical needs more context

}

The query construction is semantically optimized:

// Build search query based on action context

query := fmt.Sprintf("action '%s' execution analysis failure success pattern",

reflection.ActionType)

if !reflection.Success && reflection.ErrorMessage != "" {

query += " " + reflection.ErrorMessage

}

Stage 4: Timeline Injection

The reflection results are injected into the Timeline using emphatic language to ensure the AI agent prioritizes the guidance:

// From reflection_memory.go - addReflectionToTimeline

if reflection.Success {

timelineMsg.WriteString(fmt.Sprintf("✓ [REFLECTION] Action '%s' EXECUTED SUCCESSFULLY",

reflection.ActionType))

} else {

timelineMsg.WriteString(fmt.Sprintf("✗ [CRITICAL REFLECTION] Action '%s' FAILED",

reflection.ActionType))

}

// Mandatory recommendations with strong language

if len(reflection.Suggestions) > 0 {

timelineMsg.WriteString("MANDATORY RECOMMENDATIONS FOR FUTURE ACTIONS:\n")

for i, suggestion := range reflection.Suggestions {

timelineMsg.WriteString(fmt.Sprintf("%d. %s\n", i+1, suggestion))

}

}

Stage 5: Reflection Caching

Recent reflections are cached for prompt context (limited to 3 most recent):

// Cache recent reflections for prompt context

reflections = append(reflections, reflection)

if len(reflections) > 3 {

reflections = reflections[len(reflections)-3:]

}

r.Set("self_reflections", reflections)

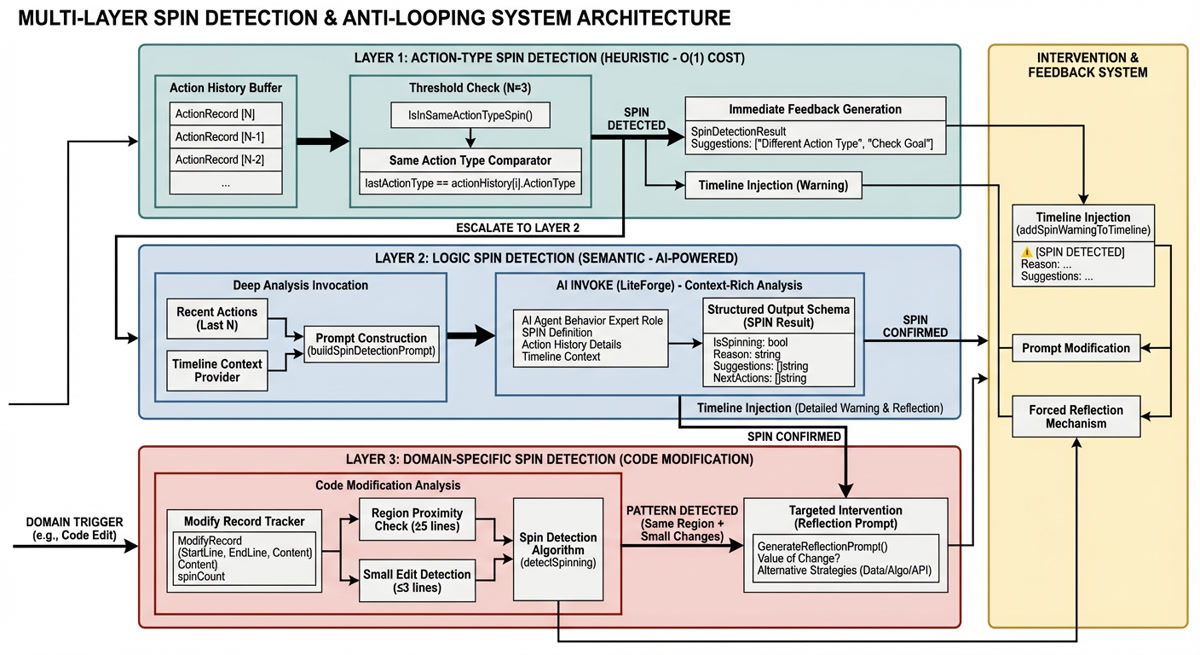

2. Spin Detection & Anti-Looping

One of the most common failures in LLM Agents is getting stuck in infinite loops (e.g., repeatedly trying cat /etc/passwd when permission is denied). Memfit AI employs a dual-layer detection system with both heuristic and semantic analysis.

2.1 Layer 1: Action-Type Spin Detection (Heuristic)

This low-cost detection mechanism operates without AI involvement:

2.1.1 Detection Algorithm

// From spin_detection.go - IsInSameActionTypeSpin

func (r *ReActLoop) IsInSameActionTypeSpin() bool {

r.actionHistoryMutex.Lock()

defer r.actionHistoryMutex.Unlock()

threshold := r.sameActionTypeSpinThreshold

if threshold <= 0 {

threshold = 3 // Default threshold

}

historyLen := len(r.actionHistory)

if historyLen < threshold {

return false

}

// Check if last N actions are all the same type

lastActionType := r.actionHistory[historyLen-1].ActionType

for i := historyLen - threshold; i < historyLen; i++ {

if r.actionHistory[i].ActionType != lastActionType {

return false

}

}

log.Infof("detected same action type spin: %d consecutive actions of type %s",

threshold, lastActionType)

return true

}

2.1.2 Response Strategy

When Layer 1 detection triggers, the system generates immediate feedback:

// SpinDetectionResult structure

type SpinDetectionResult struct {

IsSpinning bool `json:"is_spinning"`

Reason string `json:"reason"`

Suggestions []string `json:"suggestions"`

NextActions []string `json:"next_actions"`

ActionType string `json:"action_type"`

ConsecutiveCount int `json:"consecutive_count"`

}

// Default suggestions for Layer 1 detection

Suggestions: []string{

"尝试使用不同的 Action 类型",

"检查任务目标是否明确",

"考虑是否需要用户澄清",

}

2.2 Layer 2: Logic Spin Detection (Semantic)

When Layer 1 triggers, the system escalates to AI-powered semantic analysis:

2.2.1 Deep Analysis Invocation

// From spin_detection.go - IsInSameLogicSpinWithAI

func (r *ReActLoop) IsInSameLogicSpinWithAI() (*SpinDetectionResult, error) {

// Acquire action history

recentActions := make([]*ActionRecord, r.sameLogicSpinThreshold)

copy(recentActions, r.actionHistory[historyLen-r.sameLogicSpinThreshold:])

// Get Timeline context for analysis

timelineContent := r.getTimelineContentForSpinDetection()

// Build analysis prompt

prompt := r.buildSpinDetectionPrompt(recentActions, timelineContent)

// Invoke AI with structured output schema

outputSchema := []aitool.ToolOption{

aitool.WithBoolParam("is_spinning",

aitool.WithParam_Description("是否发生了 SPIN 情况")),

aitool.WithStringParam("reason",

aitool.WithParam_Description("如果发生了 SPIN,说明原因")),

aitool.WithStringArrayParam("suggestions",

aitool.WithParam_Description("提供打破循环的建议")),

aitool.WithStringArrayParam("next_actions",

aitool.WithParam_Description("具体的下一步操作建议")),

}

action, err := r.invoker.InvokeLiteForge(ctx, "spin_detection", prompt, outputSchema)

// ... process result

}

2.2.2 Context-Rich Prompt Construction

The AI analysis receives comprehensive context:

// From spin_detection.go - buildSpinDetectionPrompt

prompt.WriteString("你是一个 AI Agent 行为分析专家。请分析以下 Action 执行历史,判断是否发生了 SPIN 情况。\n\n")

prompt.WriteString("SPIN 的定义:AI Agent 反复做出相同或相似的决策,没有让任务得到推进。\n\n")

// Include detailed action history

for i, action := range actions {

prompt.WriteString(fmt.Sprintf("### 第 %d 次执行 (迭代 %d)\n", i+1, action.IterationIndex))

prompt.WriteString(fmt.Sprintf("- Action 类型: %s\n", action.ActionType))

prompt.WriteString(fmt.Sprintf("- Action 名称: %s\n", action.ActionName))

prompt.WriteString("- Action 参数:\n")

// ... JSON-formatted parameters

}

// Include Timeline context

if timelineContent != "" {

prompt.WriteString("## Timeline 上下文\n\n")

prompt.WriteString(timelineContent)

}

2.3 Layer 3: Domain-Specific Spin Detection

For specialized scenarios like code modification, the system employs additional heuristics:

2.3.1 Code Modification Spin Detection

// From loop_yaklangcode/spin_detection.go

type ModifyRecord struct {

StartLine int

EndLine int

Content string

}

// Region proximity check (±5 lines)

func isInSameRegion(r1, r2 ModifyRecord) bool {

startDistance := absInt(r1.StartLine - r2.StartLine)

endDistance := absInt(r1.EndLine - r2.EndLine)

return startDistance <= 5 && endDistance <= 5

}

// Small edit detection (≤3 lines)

func isSmallEdit(record ModifyRecord) bool {

lineCount := record.EndLine - record.StartLine + 1

return lineCount <= 3

}

2.3.2 Spin Detection Algorithm

The detection algorithm tracks modification patterns:

// Detection logic (from loop_yaklangcode/spin_detection.go)

func detectSpinning(loop LoopActionHistoryProvider, currentRecord ModifyRecord) (bool, string) {

// Track spin count across iterations

spinCount := parseSpinCount(loop.Get("modify_spin_count"))

// Analyze recent modify_code actions

historyRecords := extractModifyRecords(loop.GetLastNAction(10))

// Check for same-region repeated modifications

isSameRegion := false

isSmallChange := isSmallEdit(currentRecord)

if len(historyRecords) > 0 {

lastRecord := historyRecords[len(historyRecords)-1]

if isInSameRegion(currentRecord, lastRecord) {

isSameRegion = true

}

}

// Spin detection: same region + small changes

if isSameRegion && isSmallChange {

spinCount++

if spinCount >= 3 {

reason := fmt.Sprintf("检测到在第 %d-%d 行附近连续 %d 次小幅修改代码,可能陷入了修改循环",

currentRecord.StartLine, currentRecord.EndLine, spinCount)

return true, reason

}

} else {

spinCount = 0 // Reset on significant change

}

return false, ""

}

2.3.3 Targeted Intervention Prompt

When code modification spin is detected, a specialized reflection prompt is generated:

// From loop_yaklangcode/spin_detection.go - generateReflectionPrompt

func generateReflectionPrompt(record ModifyRecord, reason string) string {

return fmt.Sprintf(`【代码修改空转警告】

%s

请停下来进行反思,回答以下问题:

【问题1:改动价值】

本次修改第 %d-%d 行的目标是什么?与上几次修改相比,有什么新的价值或进展?

【问题2:备选路径】

如果不继续修改这几行代码,还有哪些其他解决方案?

请至少列出 3 个不同层面的策略:

- 数据/变量层面的调整

- 算法/逻辑层面的重构

- 接口/API 调用方式的改变

- 使用不同的库或工具函数

【问题3:搜索建议】

强烈建议在继续修改前,先执行以下搜索以寻找正确的代码模式...

【行动建议】

请选择收益最高、风险最低的一个策略,并说明理由。

不要再继续在同一位置反复尝试小幅修改!`,

reason, record.StartLine, record.EndLine)

}

2.4 SPIN Warning Injection

When SPIN is detected, the system physically modifies the agent's context:

// From reflection.go - addSpinWarningToTimeline

func (r *ReActLoop) addSpinWarningToTimeline(reflection *ActionReflection) {

if !reflection.IsSpinning {

return

}

var msg strings.Builder

msg.WriteString("⚠️ [SPIN DETECTED] 检测到 AI Agent 陷入循环\n\n")

msg.WriteString(fmt.Sprintf("**Action 类型**: %s\n", reflection.ActionType))

msg.WriteString(fmt.Sprintf("**原因**: %s\n\n", reflection.SpinReason))

if len(reflection.Suggestions) > 0 {

msg.WriteString("**建议**:\n")

for i, suggestion := range reflection.Suggestions {

msg.WriteString(fmt.Sprintf("%d. %s\n", i+1, suggestion))

}

}

// Add to Timeline with high-priority event type

invoker.AddToTimeline("logic_spin_warning", msg.String())

}

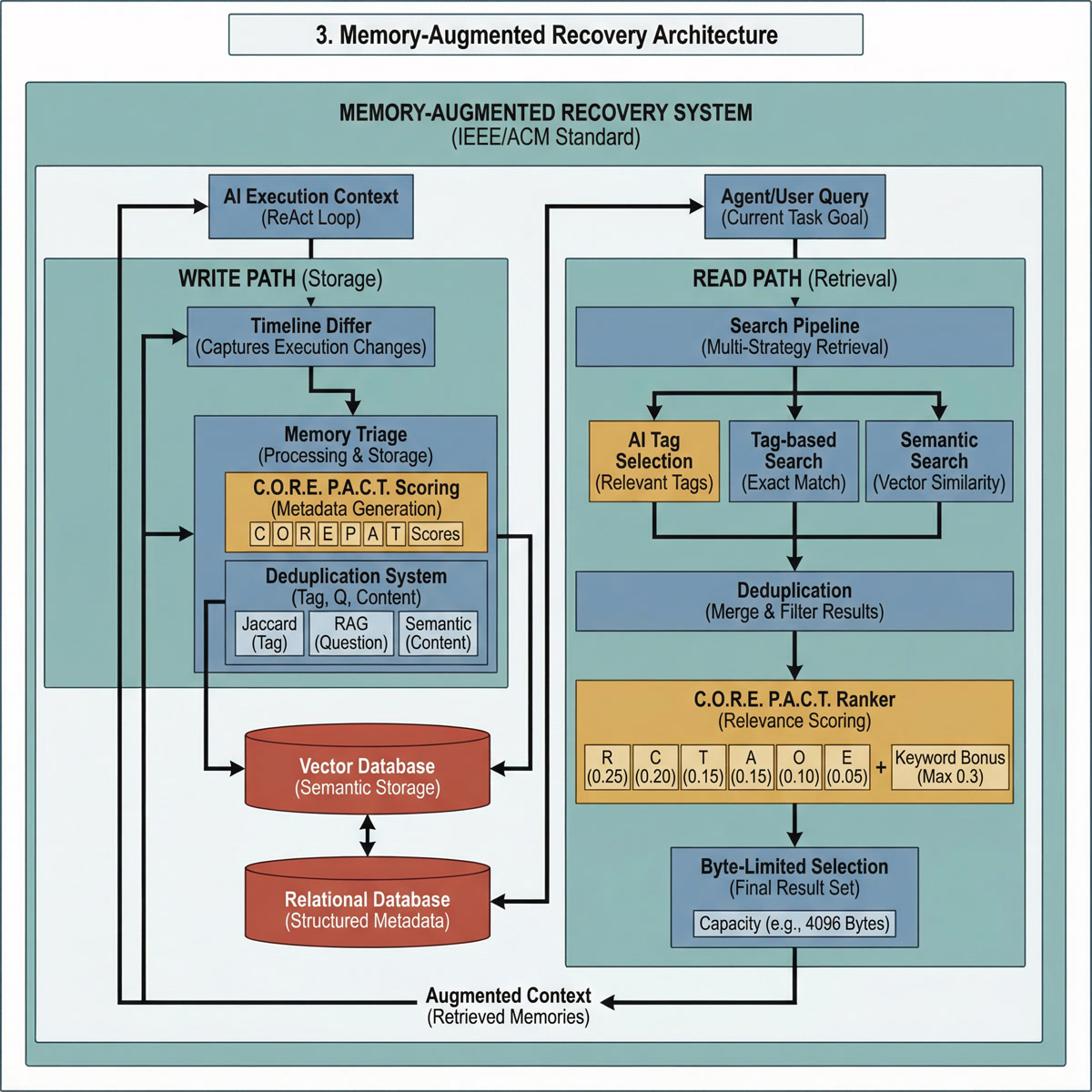

3. Memory-Augmented Recovery

When the agent encounters a problem, it doesn't just rely on its pre-trained weights. It consults the Long-Term Vector Memory—the "Black Box Recorder" of past missions.

3.1 Memory Entity Structure

Each memory is stored with rich metadata enabling sophisticated retrieval:

// MemoryEntity structure (conceptual representation)

type MemoryEntity struct {

Id string `json:"id"`

Content string `json:"content"`

Tags []string `json:"tags"`

PotentialQuestions []string `json:"potential_questions"`

CreatedAt time.Time `json:"created_at"`

// C.O.R.E. P.A.C.T. Scoring Dimensions

C_Score float64 `json:"c_score"` // Connectivity

O_Score float64 `json:"o_score"` // Origin

R_Score float64 `json:"r_score"` // Relevance

E_Score float64 `json:"e_score"` // Emotion

P_Score float64 `json:"p_score"` // Preference

A_Score float64 `json:"a_score"` // Actionability

T_Score float64 `json:"t_score"` // Temporality

}

3.2 The C.O.R.E. P.A.C.T. Scoring Framework

The system employs a 7-dimensional scoring framework to evaluate memory importance and relevance:

| Dimension | Full Name | Weight | Description |

|---|---|---|---|

| R | Relevance | 0.25 | How critical is this to current goals? |

| C | Connectivity | 0.20 | How many other memories is this connected to? |

| T | Temporality | 0.15 | How long should this be retained? |

| A | Actionability | 0.15 | Can AI learn and improve from this? |

| P | Preference | 0.10 | Binding to user's personal style? |

| O | Origin | 0.10 | Source reliability? |

| E | Emotion | 0.05 | User's emotional state? |

3.2.1 Scoring Scale Definitions

Each dimension uses a normalized 0.0-1.0 scale with semantic ranges:

T - Temporality (时效性):

0.0-0.3: Transient memory - Only valid for current session0.3-0.6: Short-term memory - Valid within days/weeks0.6-0.8: Mid-term memory - Stable preferences0.8-1.0: Long-term/Core memory - Unchanging identity

A - Actionability (可操作性):

0.0-0.3: Low value - Simple facts, no learning value0.3-0.6: Implicit feedback - Behavioral patterns0.6-0.8: Explicit feedback - Direct user evaluation0.8-1.0: Generalizable rule - Clear instructions for future

C - Connectivity (关联度):

0.0-0.3: Isolated memory - One-time fact0.3-0.6: Linearly connected - Sequential relationship0.6-0.8: Thematic node - Key information in a theme0.8-1.0: Core hub - Connects multiple domains

3.3 Memory Retrieval Pipeline

The retrieval process combines multiple search strategies:

// From aimemory_search_memory.go - searchMemoryWithAIOption

func (t *AIMemoryTriage) searchMemoryWithAIOption(origin any, bytesLimit int, disableAI bool) (*SearchMemoryResult, error) {

var allMemories []*MemoryEntity

// Step 1: AI-powered tag selection

if !disableAI {

relevantTags, err = t.SelectTags(ctx, queryText)

}

// Step 2: Tag-based retrieval

if len(relevantTags) > 0 {

tagMemories, err := t.SearchByTags(relevantTags, false, 20)

allMemories = append(allMemories, tagMemories...)

}

// Step 3: Semantic similarity search

semanticResults, err := t.SearchBySemantics(queryText, 15)

for _, result := range semanticResults {

allMemories = append(allMemories, result.Entity)

}

// Step 4: Deduplication

uniqueMemories := t.deduplicateMemories(allMemories)

// Step 5: C.O.R.E. P.A.C.T. ranking

rankedMemories := t.rankMemoriesByRelevance(uniqueMemories, queryText)

// Step 6: Byte-limited selection

selectedMemories, totalContent, contentBytes := t.selectMemoriesByBytesLimit(rankedMemories, bytesLimit)

return &SearchMemoryResult{

Memories: selectedMemories,

TotalContent: totalContent,

ContentBytes: contentBytes,

}, nil

}

3.4 Relevance Score Calculation

The ranking algorithm combines C.O.R.E. P.A.C.T. scores with keyword matching:

// From aimemory_search_memory.go - calculateRelevanceScore

func (t *AIMemoryTriage) calculateRelevanceScore(memory *MemoryEntity, query string) float64 {

// C.O.R.E. P.A.C.T. weighted combination

weights := map[string]float64{

"R": 0.25, // Relevance - most important

"C": 0.20, // Connectivity

"T": 0.15, // Temporality

"A": 0.15, // Actionability

"P": 0.10, // Preference

"O": 0.10, // Origin

"E": 0.05, // Emotion

}

relevanceScore := weights["R"]*memory.R_Score +

weights["C"]*memory.C_Score +

weights["T"]*memory.T_Score +

weights["A"]*memory.A_Score +

weights["P"]*memory.P_Score +

weights["O"]*memory.O_Score +

weights["E"]*memory.E_Score

// Keyword matching bonus (capped at 0.3)

contentBonus := t.calculateKeywordBonus(memory, query)

return min(1.0, relevanceScore + contentBonus)

}

3.5 Keyword Matching System

The keyword bonus calculation uses multiple matching strategies:

// From aimemory_search_memory.go - calculateKeywordBonus

func (t *AIMemoryTriage) calculateKeywordBonus(memory *MemoryEntity, query string) float64 {

contentBonus := 0.0

// 1. Content keyword matching (weight: 0.1)

contentMatchScore := t.keywordMatcher.MatchScore(query, memory.Content)

contentBonus += contentMatchScore * 0.1

// 2. Tag keyword matching (weight: 0.08)

tagContent := strings.Join(memory.Tags, " ")

tagMatchScore := t.keywordMatcher.MatchScore(query, tagContent)

contentBonus += tagMatchScore * 0.08

// 3. Question keyword matching (weight: 0.05)

questionContent := strings.Join(memory.PotentialQuestions, " ")

questionMatchScore := t.keywordMatcher.MatchScore(query, questionContent)

contentBonus += questionMatchScore * 0.05

// 4. Direct keyword presence (weight: 0.05)

if t.keywordMatcher.ContainsKeyword(query, memory.Content) {

contentBonus += 0.05

}

// 5. All keywords match bonus (weight: 0.03)

if t.keywordMatcher.MatchAllKeywords(query, memory.Content) {

contentBonus += 0.03

}

return min(0.3, contentBonus) // Cap at 0.3

}

3.6 Memory Deduplication System

The system employs a multi-dimensional deduplication approach:

// From aimemory_triage_saving.go - BatchIsRepeatedMemoryEntities

func (t *AIMemoryTriage) BatchIsRepeatedMemoryEntities(entities []*MemoryEntity, config *DeduplicationConfig) ([]int, error) {

var nonRepeatedIndices []int

for i, entity := range entities {

// Dimension 1: Tag-based repetition (Jaccard similarity)

tagRepeated, _ := t.checkTagRepetition(entity, config.TagOverlapThreshold)

// Dimension 2: Question-based repetition (RAG search)

questionRepeated, _ := t.checkQuestionRepetition(entity, config.QuestionSimilarityThreshold)

// Dimension 3: Content-based repetition (semantic similarity)

contentRepeated, _ := t.checkContentRepetition(entity, config.ContentSimilarityThreshold)

// Composite judgment: 2+ dimensions matching = duplicate

repetitionScore := 0

if tagRepeated { repetitionScore++ }

if questionRepeated { repetitionScore++ }

if contentRepeated { repetitionScore++ }

if repetitionScore < 2 {

nonRepeatedIndices = append(nonRepeatedIndices, i)

}

}

return nonRepeatedIndices, nil

}

3.6.1 Deduplication Configuration

// DeduplicationConfig with sensible defaults

type DeduplicationConfig struct {

TagOverlapThreshold float64 // Default: 0.8 (Jaccard)

QuestionSimilarityThreshold float64 // Default: 0.85

ContentSimilarityThreshold float64 // Default: 0.9

}

3.7 Learning from Failure

The reliability system is designed for cumulative improvement:

3.7.1 Post-Iteration Memory Processing

// From re-act_mainloop.go - OnPostIteration callback

func onPostIteration(loop *ReActLoop, iteration int, task AIStatefulTask, isDone bool, reason any) {

// Calculate timeline diff

diffStr, err := config.TimelineDiffer.Diff()

if err != nil || diffStr == "" {

return // No new information to record

}

// Contextual input construction

contextualInput := fmt.Sprintf("ReAct迭代 %d/%s: %s\n任务状态: %s\n完成状态: %v\n原因: %v",

iteration,

task.GetId(),

diffStr,

string(task.GetStatus()),

isDone,

reason)

// Intelligent memory processing (includes deduplication, scoring, saving)

err = memoryTriage.HandleMemory(contextualInput)

}

3.7.2 Task Completion Memory Search

When a task completes, the system proactively searches for relevant memories:

// Memory search for completed tasks (from coordinator_invoker.go)

if isDone {

go func() {

// Search for memories related to this task (limit: 4KB)

searchResult, err := memoryTriage.SearchMemory(task.GetUserInput(), 4096)

if err != nil {

return

}

if len(searchResult.Memories) > 0 {

log.Infof("found %d relevant memories for completed task %s",

len(searchResult.Memories), task.GetId())

// Log memory details for debugging

for i, mem := range searchResult.Memories {

log.Infof("relevant memory %d: %s (C=%.2f, R=%.2f)",

i+1, mem.Content[:100], mem.C_Score, mem.R_Score)

}

}

}()

}

4. Reliability Metrics & Monitoring

4.1 Comprehensive Mechanism Matrix

| Mechanism | Detection Trigger | Response Action | Computational Cost | Purpose |

|---|---|---|---|---|

| Self-Reflection (Minimal) | Default | Log only | O(1) | Execution tracking |

| Self-Reflection (Standard) | Iteration > 5, SPIN | AI analysis + Timeline injection | O(n) API calls | Behavioral adjustment |

| Self-Reflection (Critical) | Execution failure | Full root cause analysis | O(n) API + 10KB memory | Error recovery |

| Action Spin (Layer 1) | Same(Action) × N | Immediate warning | O(1) | Break mechanical loops |

| Logic Spin (Layer 2) | Layer 1 trigger | AI-powered analysis | O(n) API calls | Break cognitive stalemates |

| Domain Spin (Layer 3) | Same region × 3 | Specialized intervention | O(1) | Domain-specific recovery |

| Memory Recovery | New task / Failure | Vector search + ranking | O(log n) search | Experience reuse |

| Deduplication | Memory save | Multi-dimensional check | O(n²) comparison | Storage efficiency |

4.2 Cascading Defense Strategy

The reliability mechanisms form a cascading defense:

Level 0: Minimal Reflection (Always On)

↓ (on failure or iteration > 5)

Level 1: Standard Reflection + Layer 1 SPIN Detection

↓ (on SPIN trigger)

Level 2: AI-Powered Logic Analysis

↓ (on domain-specific patterns)

Level 3: Specialized Intervention

↓ (always)

Level 4: Memory-Augmented Recovery

4.3 Key Reliability Guarantees

- No Silent Failures: Every execution error triggers at minimum a

Criticalreflection. - Loop Termination: The multi-layer SPIN detection ensures loops are detected within N iterations (configurable, default N=3).

- Context Preservation: All significant events are recorded to the Timeline and optionally persisted to long-term memory.

- Progressive Degradation: As reliability mechanisms activate, computational resources scale proportionally.

- Cross-Session Learning: Memory entities persist across sessions, enabling continuous improvement.

5. Conclusion

The Memfit AI reliability architecture represents a synthesis of established software engineering principles with novel AI-specific mechanisms. Through the combination of:

- Graduated Reflection Levels for proportional diagnostic depth

- Multi-Layer SPIN Detection for comprehensive loop prevention

- C.O.R.E. P.A.C.T. Memory Framework for intelligent experience retrieval

- Timeline-Based Context Injection for behavioral guidance

The system achieves a level of operational reliability that transcends traditional AI agent implementations. The architecture is designed not merely to prevent failures, but to learn from them, building a cumulative knowledge base that improves reliability over time.

This defense-in-depth approach ensures that Memfit AI can operate robustly in unpredictable real-world environments, gracefully handling errors, avoiding infinite loops, and leveraging past experience to navigate novel challenges.